Publications

Papers


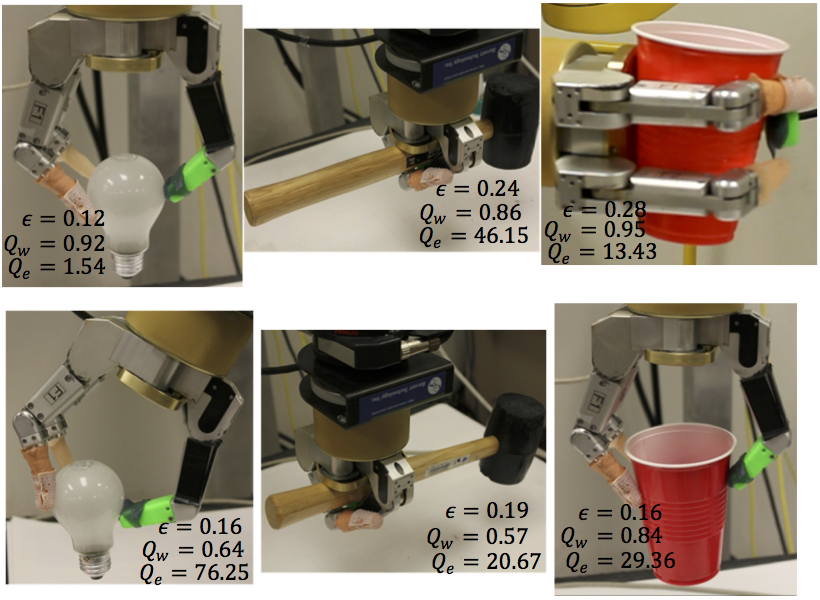

Lin, Y. and Sun, Y. (2015) Grasp Planning to Maximize Task Coverage, Intl. Journal of Robotics Research, 34(9): 1195-1210.

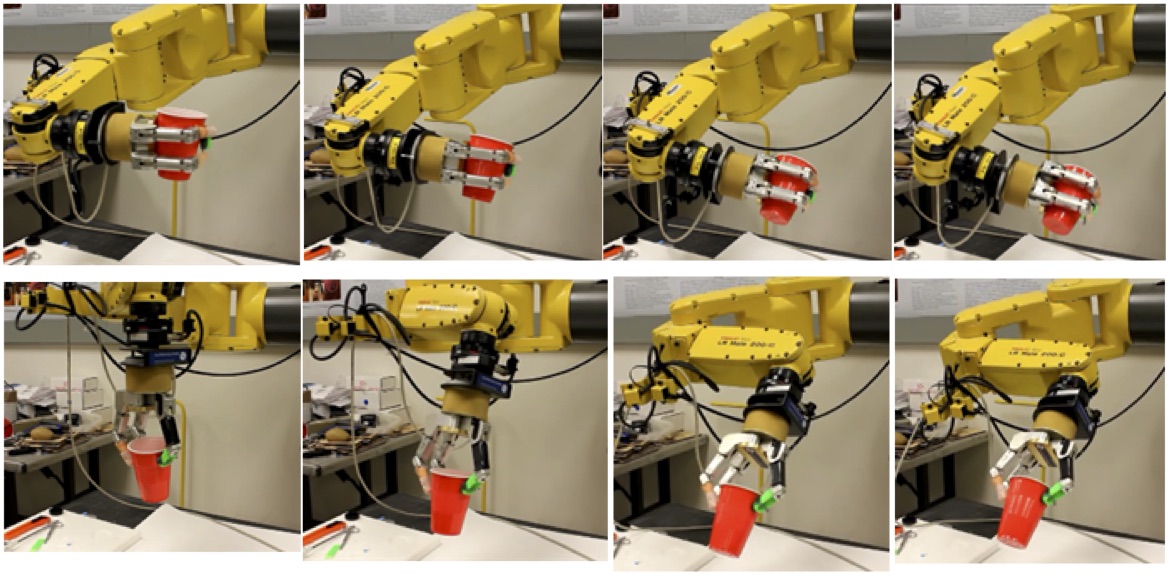

Lin, Y. and Sun, Y. (2015) Robot Grasp Planning Based on Demonstrated Grasp Strategies, Intl. Journal of Robotics Research, 34(1): 26-42.

Sun, Y., Lin, Y., and Huang, Y. (2016) Robotic Grasping for Instrument Manipulations, URAI, pp. 1-3 (invited).

Lin, Y. and Sun, Y. (2015) Task-Based Grasp Quality Measures for Grasp Synthesis, IROS, pp. 485-490.

Lin, Y. and Sun, Y. (2014) Grasp Planning Based on Grasp Strategy Extraction from Demonstration, IROS, pp. 4458-4463.

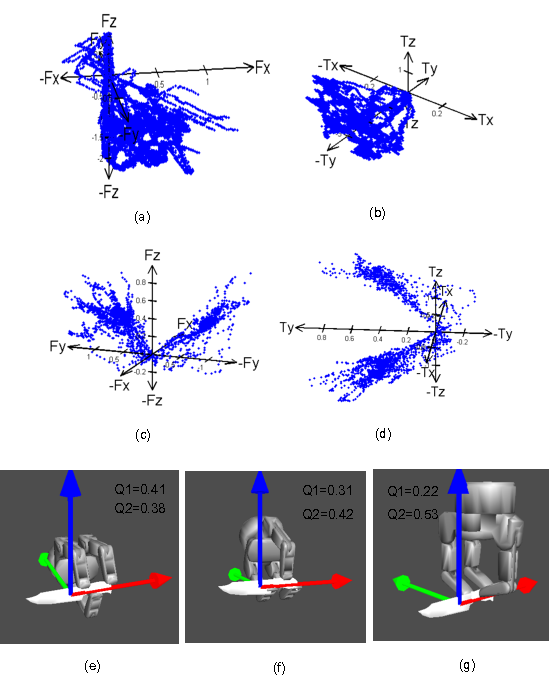

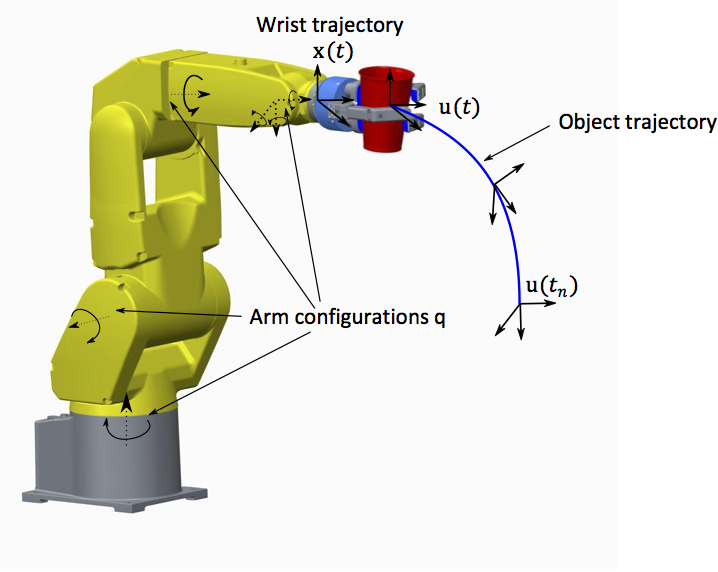

Lin, Y. and Sun, Y. (2016) Task-Oriented Grasp Planning Based on Disturbance Distribution, in Robotics Research, Springer International Publishing, pp. 577-592 (presented at ISRR).

Lin, Y., Ren, S., Clevenger, M., and Sun, Y. (2012) Learning Grasping Force from Demonstration, IEEE Intl. Conference on Robotics and Automation (ICRA), pp. 1526-1531.

Patents

Sun, Y. and Lin, Y., Systems and Methods for Planning a Robot Grasp Based upon a Demonstration Grasp, US Patent No. 9,321,176, issued April 26, 2016.

This work is supported by NSF. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.